AWS Bedrock: 7 Powerful Reasons to Use This Revolutionary AI Platform

Imagine building cutting-edge AI applications without managing a single server. That’s the promise of AWS Bedrock—a fully managed service that’s changing how developers access and deploy foundation models. Let’s dive into why it’s a game-changer.

What Is AWS Bedrock and Why It Matters

AWS Bedrock is Amazon Web Services’ innovative platform that provides developers with easy, scalable access to a wide range of foundation models (FMs) from leading AI companies. It eliminates the complexity traditionally associated with deploying large language models (LLMs) and generative AI systems, allowing businesses to innovate faster and with less overhead.

Definition and Core Purpose

AWS Bedrock acts as a serverless, fully managed interface to foundation models, enabling users to access, fine-tune, and deploy models without managing infrastructure. It’s designed for developers, data scientists, and enterprises looking to integrate generative AI into their applications securely and efficiently.

- Provides a unified API to interact with multiple FMs

- Supports models from AI21 Labs, Anthropic, Cohere, Meta, and Amazon’s own Titan

- Enables rapid prototyping and deployment of AI-powered features

“AWS Bedrock simplifies the integration of generative AI, making it accessible even to teams without deep machine learning expertise.” — AWS Official Documentation
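To see which providers and models that single API exposes in your account and region, you can query the control-plane `bedrock` client. The sketch below assumes you pass in a boto3 `bedrock` client (for example `boto3.client("bedrock")`, not `bedrock-runtime`). It groups the `modelSummaries` returned by `list_foundation_models` by provider. The helper names are illustrative.

```python
from collections import defaultdict


def models_by_provider(bedrock_client, output_modality="TEXT"):
    """Group Bedrock foundation model IDs by provider name.

    `bedrock_client` is assumed to be a boto3 client for the "bedrock"
    control-plane service; output_modality is e.g. "TEXT", "IMAGE", or "EMBEDDING".
    """
    response = bedrock_client.list_foundation_models(byOutputModality=output_modality)
    return group_summaries(response["modelSummaries"])


def group_summaries(summaries):
    """Map providerName -> sorted list of modelId values."""
    grouped = defaultdict(list)
    for summary in summaries:
        grouped[summary["providerName"]].append(summary["modelId"])
    return {provider: sorted(ids) for provider, ids in grouped.items()}
```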

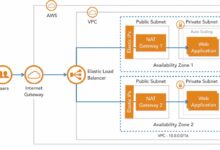

How AWS Bedrock Fits into the AWS Ecosystem

Built on the robust AWS cloud infrastructure, AWS Bedrock seamlessly integrates with other AWS services like Amazon SageMaker, AWS Lambda, Amazon API Gateway, and Amazon VPC. This integration allows for end-to-end AI application development, from data ingestion to model deployment and monitoring.

- Leverages AWS Identity and Access Management (IAM) for secure access control

- Uses Amazon CloudWatch for logging and performance tracking

- Integrates with AWS KMS for data encryption and compliance

This tight integration ensures that AI workflows are not only powerful but also secure, scalable, and compliant with enterprise standards.
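Here is a sketch of the CloudWatch side of that integration. The function sums token counts for one model over a time window. It assumes the `AWS/Bedrock` metric namespace with `InputTokenCount` and `OutputTokenCount` metrics and a `ModelId` dimension, as documented at the time of writing. You pass in a boto3 CloudWatch client, and `token_usage_totals` is a hypothetical helper.

```python
from datetime import datetime, timedelta, timezone


def token_usage_totals(cloudwatch_client, model_id, days=7):
    """Total Bedrock input/output tokens for one model over the last `days` days.

    Assumes the AWS/Bedrock namespace, InputTokenCount/OutputTokenCount metrics,
    and a ModelId dimension.
    """
    end = datetime.now(timezone.utc)
    start = end - timedelta(days=days)
    totals = {}
    for metric in ("InputTokenCount", "OutputTokenCount"):
        stats = cloudwatch_client.get_metric_statistics(
            Namespace="AWS/Bedrock",
            MetricName=metric,
            Dimensions=[{"Name": "ModelId", "Value": model_id}],
            StartTime=start,
            EndTime=end,
            Period=86400,  # one datapoint per day
            Statistics=["Sum"],
        )
        # Add up the daily sums returned for the window
        totals[metric] = sum(point["Sum"] for point in stats["Datapoints"])
    return totals
```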

Key Features That Make AWS Bedrock Stand Out

AWS Bedrock isn’t just another AI service—it’s a comprehensive platform engineered for flexibility, security, and ease of use. Its feature set is tailored to meet the demands of modern AI development, from rapid experimentation to production-grade deployment.

Access to Multiple Foundation Models

One of the most compelling aspects of AWS Bedrock is its support for a diverse portfolio of foundation models. Users can choose models based on their specific use case, whether it’s natural language generation, text summarization, or code generation.

- Anthropic’s Claude: Known for its strong reasoning and safety features, ideal for customer service chatbots and content moderation.

- AI21 Labs’ Jurassic-2: Excels in complex language tasks and domain-specific applications like legal or medical text analysis.

- Cohere’s Command: Optimized for enterprise search, summarization, and multilingual support.

- Meta’s Llama 2 and Llama 3: Openly available (open-weight) models released under Meta’s community license, offering transparency and customization for developers who want more control over model behavior.

- Amazon Titan: AWS’s proprietary models designed for summarization, classification, and embedding generation with built-in safety filters.

This model diversity allows organizations to avoid vendor lock-in and select the best tool for each job. You can test different models via the AWS Management Console or API and compare performance side-by-side.
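You can also script a side-by-side comparison. This minimal sketch assumes a boto3 `bedrock-runtime` client and uses the Converse API, which accepts the same message format across the models that support it. The model IDs you pass must be enabled in your account, and `compare_models` is a hypothetical helper.

```python
def compare_models(runtime_client, model_ids, prompt, max_tokens=300):
    """Send one prompt to several models via the Converse API and collect results.

    `runtime_client` is assumed to be a boto3 "bedrock-runtime" client.
    """
    results = {}
    for model_id in model_ids:
        response = runtime_client.converse(
            modelId=model_id,
            messages=[{"role": "user", "content": [{"text": prompt}]}],
            inferenceConfig={"maxTokens": max_tokens},
        )
        # Converse returns the reply plus usage and latency metrics in a common shape
        results[model_id] = {
            "text": response["output"]["message"]["content"][0]["text"],
            "latency_ms": response["metrics"]["latencyMs"],
            "output_tokens": response["usage"]["outputTokens"],
        }
    return results
```

For example, calling `compare_models(boto3.client("bedrock-runtime"), ["anthropic.claude-3-haiku-20240307-v1:0", "meta.llama3-8b-instruct-v1:0"], "Summarize our refund policy in two sentences.")` returns each model's answer alongside its reported latency and output-token count.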

Serverless Architecture and Scalability

AWS Bedrock operates on a serverless model, meaning there’s no infrastructure to provision or manage. This architecture automatically scales to handle traffic spikes, making it ideal for applications with variable workloads.

- No need to manage GPUs, clusters, or container orchestration

- Automatic scaling based on request volume

- Pay-per-use pricing model reduces cost overhead

For example, a customer support chatbot powered by AWS Bedrock can handle thousands of concurrent users during peak hours without manual intervention, then scale down during off-peak times—saving costs and ensuring reliability.

Security, Privacy, and Compliance

Security is baked into AWS Bedrock from the ground up. All data processed through the service remains private and is not used to train the underlying models, addressing a major concern for enterprises in regulated industries.

- Data is encrypted in transit and at rest using AWS KMS

- Prompts and responses are not stored by default (model invocation logging to CloudWatch or S3 is opt-in)

- Supports private VPC endpoints to keep traffic within your network

- In scope for compliance programs such as SOC and ISO, HIPAA eligible, and usable in GDPR-compliant workloads

This makes AWS Bedrock suitable for healthcare, finance, legal, and government applications where data sensitivity is paramount.
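To keep traffic private, you create an interface VPC endpoint (AWS PrivateLink) for the Bedrock Runtime service. The sketch below only builds the keyword arguments for EC2's `create_vpc_endpoint`. The VPC, subnet, and security-group IDs are placeholders. The sketch assumes the service name follows the usual `com.amazonaws.<region>.bedrock-runtime` pattern.

```python
def bedrock_runtime_endpoint_request(region, vpc_id, subnet_ids, security_group_ids):
    """Build kwargs for EC2 create_vpc_endpoint targeting Bedrock Runtime via PrivateLink.

    All IDs passed in are placeholders for your own network resources.
    """
    return {
        "VpcEndpointType": "Interface",
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.bedrock-runtime",
        "SubnetIds": list(subnet_ids),
        "SecurityGroupIds": list(security_group_ids),
        # Lets the default bedrock-runtime hostname resolve to the private endpoint
        "PrivateDnsEnabled": True,
    }
```

Pass the result to `boto3.client("ec2").create_vpc_endpoint(**request)`. With private DNS enabled, existing `bedrock-runtime` clients inside that VPC resolve to the endpoint without code changes.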

How AWS Bedrock Compares to Alternatives

While several platforms offer access to foundation models, AWS Bedrock stands out due to its integration, flexibility, and enterprise-grade features. Let’s compare it to key competitors.

AWS Bedrock vs. Azure OpenAI Service

Microsoft’s Azure OpenAI Service provides access to OpenAI models like GPT-4 but is limited to OpenAI’s offerings. In contrast, AWS Bedrock offers a broader range of models from multiple providers, giving users more choice and flexibility.

- AWS Bedrock supports open models like Llama 3; Azure OpenAI does not

- Both services offer fine-tuning for select models, but Bedrock extends customization to models from several providers, while Azure OpenAI covers only OpenAI models

- Both integrate with their respective cloud ecosystems, but AWS offers deeper VPC and IAM integration

For organizations wanting to avoid dependency on a single AI vendor, AWS Bedrock is the more strategic choice.

AWS Bedrock vs. Google Vertex AI

Google Vertex AI offers a robust AI platform with access to Google’s Gemini models (and the earlier PaLM 2), plus third-party and open models through its Model Garden. However, it is more tightly coupled with Google’s ecosystem and centers on Google’s own models.

- Vertex AI emphasizes Google’s proprietary models; AWS Bedrock promotes multi-vendor access

- Bedrock serves open-weight models such as Llama through the same serverless API as its proprietary models, enabling greater transparency

- Vertex AI has strong MLOps tools, but Bedrock integrates better with serverless and event-driven architectures

If your organization is already on AWS, Bedrock provides a more seamless and cost-effective path to AI adoption.

AWS Bedrock vs. Self-Hosted LLMs

Some companies opt to self-host models like Llama or Falcon using Kubernetes or SageMaker. While this offers full control, it comes with significant operational complexity.

- Self-hosting requires GPU management, scaling logic, and monitoring

- Bedrock eliminates infrastructure management, reducing time-to-market

- Self-hosting leaves license review and enforcement (for example, Meta’s Llama license terms) entirely to your team; Bedrock presents each provider’s end-user license agreement when you request model access

For most use cases, AWS Bedrock offers a faster, safer, and more cost-efficient alternative to self-hosting.

Use Cases and Real-World Applications of AWS Bedrock

The versatility of AWS Bedrock makes it applicable across industries. From automating customer service to enhancing content creation, the platform empowers businesses to innovate at scale.

Customer Support and Chatbots

One of the most common uses of AWS Bedrock is building intelligent chatbots that can understand and respond to customer inquiries in natural language.

- Integrate with Amazon Connect for voice and chat support

- Use Claude or Titan models to generate empathetic, context-aware responses

- Reduce agent workload by handling routine queries automatically

For example, a telecom company used AWS Bedrock to deploy a chatbot that resolved 60% of customer issues without human intervention, improving response time and satisfaction.
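Each model call is stateless, so context-aware replies depend on sending the conversation history with every turn. Below is a minimal sketch of that pattern with the Converse API, assuming a boto3 `bedrock-runtime` client. `SupportChat`, the system prompt, and the inference settings are illustrative choices, not a prescribed design.

```python
class SupportChat:
    """Keep a running conversation and send it to Bedrock's Converse API each turn.

    `runtime_client` is assumed to be a boto3 "bedrock-runtime" client.
    """

    def __init__(self, runtime_client, model_id, system_prompt):
        self.client = runtime_client
        self.model_id = model_id
        self.system = [{"text": system_prompt}]
        self.messages = []  # alternating user/assistant turns

    def send(self, user_text):
        self.messages.append({"role": "user", "content": [{"text": user_text}]})
        response = self.client.converse(
            modelId=self.model_id,
            system=self.system,
            messages=self.messages,
            inferenceConfig={"maxTokens": 400, "temperature": 0.3},
        )
        reply = response["output"]["message"]
        self.messages.append(reply)  # keep the assistant turn for the next request
        return reply["content"][0]["text"]
```

A production bot would also trim or summarize old turns so the history stays within the model's context window.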

Content Generation and Marketing

Marketing teams leverage AWS Bedrock to generate product descriptions, social media posts, email campaigns, and blog content at scale.

- Use Cohere’s Command model for multilingual content creation

- Generate SEO-friendly articles using prompt engineering

- Personalize messaging based on customer segments

A retail brand automated 80% of its product listing content using AWS Bedrock, cutting content creation time from weeks to hours.

Data Analysis and Summarization

Enterprises use AWS Bedrock to extract insights from large volumes of unstructured text, such as customer feedback, legal documents, or research papers.

- Summarize long documents into concise reports

- Classify support tickets by urgency or topic

- Extract key entities and sentiments from reviews

A financial institution used Bedrock to analyze quarterly earnings calls, identifying trends and risks in real time—something that previously took days of manual review.
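Ticket classification works best when the model must pick one label from a fixed list and the application validates the reply before using it. This sketch assumes a boto3 `bedrock-runtime` client and the Converse API. The label set and helper names are hypothetical.

```python
LABELS = ("billing", "outage", "account", "other")  # hypothetical label set


def build_classification_prompt(ticket_text):
    """Ask for exactly one label so the reply is easy to parse."""
    return (
        "Classify the support ticket below into exactly one of these categories: "
        + ", ".join(LABELS)
        + ". Reply with the category name only.\n\nTicket:\n"
        + ticket_text
    )


def parse_label(reply_text):
    """Normalize the model's reply; fall back to 'other' if it is not a known label."""
    label = reply_text.strip().strip(".").lower()
    return label if label in LABELS else "other"


def classify_ticket(runtime_client, model_id, ticket_text):
    response = runtime_client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": build_classification_prompt(ticket_text)}]}],
        inferenceConfig={"maxTokens": 10, "temperature": 0.0},  # short, deterministic-leaning reply
    )
    return parse_label(response["output"]["message"]["content"][0]["text"])
```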

Getting Started with AWS Bedrock: A Step-by-Step Guide

Ready to try AWS Bedrock? Here’s how to get up and running in minutes.

Setting Up Your AWS Bedrock Environment

To begin, ensure you have an AWS account with appropriate permissions. AWS Bedrock is available in select regions, so check the official AWS Bedrock page for availability.

- Navigate to the AWS Management Console

- Search for “Bedrock” and open the service

- Request access to desired foundation models (some require approval)

- Set up IAM roles with permissions for bedrock:InvokeModel and bedrock:ListFoundationModels

Once approved, you can start testing models directly in the console.
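For the IAM step, a least-privilege policy can scope `bedrock:InvokeModel` to specific foundation-model ARNs. This sketch builds such a policy document in Python. The region and model ID are examples. If you use streaming responses, you would also need `bedrock:InvokeModelWithResponseStream`.

```python
import json


def bedrock_invoke_policy(region, model_ids):
    """Least-privilege IAM policy: invoke only the listed foundation models, plus listing.

    Foundation-model ARNs leave the account field empty:
    arn:aws:bedrock:<region>::foundation-model/<model-id>
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel"],
                "Resource": [
                    f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
                    for model_id in model_ids
                ],
            },
            {
                # Listing models is not tied to a single model resource
                "Effect": "Allow",
                "Action": ["bedrock:ListFoundationModels"],
                "Resource": "*",
            },
        ],
    }


print(json.dumps(bedrock_invoke_policy("us-east-1", ["anthropic.claude-v2"]), indent=2))
```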

Invoking a Model via API

The most common way to use AWS Bedrock is through its API. Here’s a Python example using Boto3:

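```python
import json

import boto3


def invoke_claude():
    # Bedrock Runtime client; the region is an example, use one where you have model access
    client = boto3.client('bedrock-runtime', region_name='us-east-1')

    model_id = 'anthropic.claude-v2'  # Claude v2 uses the Text Completions request format
    prompt = 'Write a short poem about the cloud.'

    # Text Completions prompts must use the "\n\nHuman: ... \n\nAssistant:" turn markers
    body = json.dumps({
        "prompt": f"\n\nHuman: {prompt}\n\nAssistant:",
        "max_tokens_to_sample": 300,
    })

    response = client.invoke_model(
        body=body,
        modelId=model_id,
        accept='application/json',
        contentType='application/json',
    )

    # The response body is a stream; read it and parse the JSON payload
    response_body = json.loads(response['body'].read())
    return response_body['completion']


print(invoke_claude())
```

This script sends a prompt to Anthropic’s Claude model and returns the generated text. You can customize the prompt, model, and parameters based on your needs.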
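The example above uses Claude's older Text Completions format. Claude 3 and later models on Bedrock expect Anthropic's Messages format instead, with an `anthropic_version` field and a `messages` list. The following is a hedged sketch that assumes the `bedrock-2023-05-31` version string and the Claude 3 Haiku model ID shown. Check the model catalog for the IDs enabled in your account.

```python
import json


def claude_messages_body(prompt, max_tokens=300):
    """Request body in Anthropic's Messages format as accepted by Bedrock."""
    return json.dumps({
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": [{"type": "text", "text": prompt}]}],
    })


def claude_messages_text(response_body):
    """Concatenate the text blocks from a parsed Messages response."""
    return "".join(
        block["text"] for block in response_body["content"] if block["type"] == "text"
    )


def invoke_claude3(runtime_client, prompt, model_id="anthropic.claude-3-haiku-20240307-v1:0"):
    # runtime_client is assumed to be a boto3 "bedrock-runtime" client
    response = runtime_client.invoke_model(
        body=claude_messages_body(prompt),
        modelId=model_id,
        accept="application/json",
        contentType="application/json",
    )
    return claude_messages_text(json.loads(response["body"].read()))
```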

Fine-Tuning Models for Specific Tasks

While foundation models are powerful out of the box, fine-tuning can improve performance on domain-specific tasks. AWS Bedrock supports fine-tuning for select models such as Amazon Titan, Cohere Command, and Meta Llama.

- Prepare a dataset of input-output pairs relevant to your task

- Upload the data to Amazon S3

- Use the Bedrock console or API to start a fine-tuning job

- Deploy the customized model for inference

Fine-tuning can noticeably improve accuracy in specialized domains like legal contract analysis or medical coding; the size of the gain depends on the task and on the quality and volume of your training data.
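The first steps of that workflow can be sketched in Python. You write the training pairs as JSON Lines, then assemble the request for the `bedrock` client's `create_model_customization_job`. The `prompt`/`completion` record shape matches Amazon Titan text fine-tuning, and other base models may expect a different format. The hyperparameter names and values are illustrative assumptions to check against your base model's documentation.

```python
import json


def write_training_jsonl(pairs, path):
    """Write (prompt, completion) pairs as JSON Lines, one record per line.

    The {"prompt": ..., "completion": ...} shape is assumed (Titan text style).
    """
    with open(path, "w", encoding="utf-8") as f:
        for prompt, completion in pairs:
            f.write(json.dumps({"prompt": prompt, "completion": completion}) + "\n")


def customization_job_request(job_name, model_name, role_arn, base_model_id, train_uri, output_uri):
    """Keyword arguments for the bedrock client's create_model_customization_job."""
    return {
        "jobName": job_name,
        "customModelName": model_name,
        "roleArn": role_arn,  # role Bedrock assumes to read and write the S3 data
        "baseModelIdentifier": base_model_id,
        "customizationType": "FINE_TUNING",
        "trainingDataConfig": {"s3Uri": train_uri},
        "outputDataConfig": {"s3Uri": output_uri},
        # Hyperparameter names and valid ranges differ by base model; values are strings
        "hyperParameters": {"epochCount": "2", "batchSize": "1", "learningRate": "0.00001"},
    }
```

After uploading the file to S3, you would call `boto3.client("bedrock").create_model_customization_job(**request)`. The base model identifier accepted for customization can differ from the on-demand model ID, so confirm it in the console before starting a job.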

Best Practices for Maximizing AWS Bedrock Performance

To get the most out of AWS Bedrock, follow these proven strategies for efficiency, cost control, and reliability.

Optimize Prompt Engineering

The quality of your output depends heavily on how you structure your prompts. Use clear, specific instructions and provide examples when possible.

- Use system prompts to set context (e.g., “You are a helpful assistant.”)

- Include few-shot examples to guide model behavior

- Avoid ambiguous language that can lead to hallucinations

For instance, instead of “Summarize this,” say “Summarize the following customer review in one sentence, focusing on sentiment and key issue.”
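System prompts and few-shot examples map naturally onto the Converse API. The system prompt goes in `system`, and each example becomes a user turn followed by the ideal assistant reply. The following is a minimal sketch with a hypothetical `few_shot_request` helper and illustrative inference settings.

```python
def few_shot_request(model_id, system_prompt, examples, query):
    """Build Converse API kwargs: a system prompt plus few-shot example turns.

    `examples` is a list of (input, ideal_output) pairs, replayed as alternating
    user/assistant messages before the real query.
    """
    messages = []
    for example_input, example_output in examples:
        messages.append({"role": "user", "content": [{"text": example_input}]})
        messages.append({"role": "assistant", "content": [{"text": example_output}]})
    messages.append({"role": "user", "content": [{"text": query}]})
    return {
        "modelId": model_id,
        "system": [{"text": system_prompt}],
        "messages": messages,
        "inferenceConfig": {"maxTokens": 100, "temperature": 0.2},
    }
```

The request is then sent with `boto3.client("bedrock-runtime").converse(**few_shot_request(...))`.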

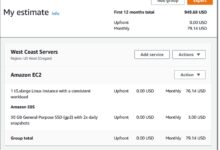

Monitor Usage and Costs

With on-demand pricing, AWS Bedrock charges text models by the number of input and output tokens processed, at rates that differ by model. Monitor usage closely to avoid unexpected costs.

- Use AWS Budgets to set spending alerts

- Enable CloudWatch metrics for request volume and latency

- Cache frequent responses to reduce redundant calls

One company reduced its Bedrock costs by 35% simply by caching common FAQ responses using Amazon ElastiCache.
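Caching can be as simple as keying responses on a hash of the model ID and the exact prompt. The sketch below keeps entries in an in-memory dictionary with a time-to-live. It stands in for a shared store such as Amazon ElastiCache, and `call_model` is any function you supply that actually invokes Bedrock.

```python
import hashlib
import time


class ResponseCache:
    """In-memory TTL cache for model responses keyed by (model_id, prompt)."""

    def __init__(self, ttl_seconds=3600, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable for testing
        self._store = {}

    @staticmethod
    def key(model_id, prompt):
        # NUL separator keeps ("ab", "c") and ("a", "bc") from colliding
        return hashlib.sha256(f"{model_id}\x00{prompt}".encode("utf-8")).hexdigest()

    def get_or_call(self, model_id, prompt, call_model):
        """Return a cached answer if still fresh, otherwise call `call_model(prompt)` and cache it."""
        k = self.key(model_id, prompt)
        hit = self._store.get(k)
        now = self.clock()
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]
        answer = call_model(prompt)
        self._store[k] = (now, answer)
        return answer
```

Exact-match keys only help with prompts that repeat verbatim, such as FAQs. Paraphrased questions will still miss the cache.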

Ensure Data Privacy and Governance

Even though AWS Bedrock doesn’t store your data, you should still implement governance policies.

- Mask or redact sensitive information before sending to the model

- Use VPC endpoints to keep traffic internal

- Audit model usage with AWS CloudTrail

These practices help maintain compliance and build trust with stakeholders.
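Redaction can start with pattern matching on the text before it leaves your application. The patterns below are illustrative assumptions covering a few common US-style formats (email, SSN, card, and phone numbers). They will miss other forms of sensitive data, so treat this sketch as a first layer rather than complete protection.

```python
import re

# Illustrative patterns only; order matters (SSN and card before the looser phone pattern)
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13 to 16 digits, optional separators
    "PHONE": re.compile(r"(?:\+?\d{1,2}[ .-]?)?\(?\d{3}\)?[ .-]?\d{3}[ .-]?\d{4}\b"),
}


def redact(text):
    """Replace matches with typed placeholders before the text is sent to a model."""
    for label, pattern in REDACTION_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```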

The Future of AWS Bedrock and Generative AI

AWS Bedrock is not static—it’s evolving rapidly alongside advances in AI. Amazon continues to add new models, features, and integrations to strengthen its position in the generative AI race.

Upcoming Features and Roadmap

AWS has hinted at several enhancements in the pipeline:

- Broader multimodal support, with more models that accept and generate both text and images

- Enhanced fine-tuning capabilities for more model types

- Integration with Amazon Q, AWS’s AI-powered assistant for developers

- Improved agent frameworks for multi-step reasoning and tool use

These updates will make AWS Bedrock even more powerful for building complex AI workflows.

Impact on Enterprise AI Adoption

By lowering the barrier to entry, AWS Bedrock is accelerating enterprise AI adoption. Companies no longer need large AI teams or massive budgets to experiment with cutting-edge models.

- Democratizes access to state-of-the-art AI

- Reduces time-to-market for AI products from months to days

- Encourages innovation through rapid prototyping

As more organizations adopt AWS Bedrock, we’ll see a surge in AI-powered applications across healthcare, education, finance, and beyond.

How AWS Bedrock Shapes the AI Ecosystem

AWS Bedrock promotes an open, multi-vendor AI ecosystem. By supporting models from Anthropic, Meta, and others, Amazon is fostering competition and innovation rather than pushing a single proprietary solution.

- Encourages model providers to improve performance and safety

- Empowers customers to choose based on merit, not lock-in

- Drives down costs through market competition

This approach benefits the entire AI community by promoting transparency, interoperability, and ethical development.

What is AWS Bedrock?

AWS Bedrock is a fully managed service that provides access to a range of foundation models for building generative AI applications without managing infrastructure. It supports models from Anthropic, AI21, Cohere, Meta, and Amazon, and integrates seamlessly with the AWS ecosystem.

How much does AWS Bedrock cost?

Pricing is based on the number of input and output tokens processed, and rates vary by model and region; check the AWS Bedrock pricing page for current rates. For example, at the time of writing, on-demand Claude Instant costs $0.80 per million input tokens and $2.40 per million output tokens.
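Token-based pricing makes cost estimates straightforward arithmetic. Divide each token count by one million and multiply by the per-million rate. The sketch below applies the Claude Instant rates quoted above to a hypothetical workload of 10,000 requests averaging 2,000 input and 500 output tokens.

```python
def estimate_cost(input_tokens, output_tokens, input_price_per_million, output_price_per_million):
    """On-demand cost in USD: (tokens / 1,000,000) * price per million, for input and output."""
    return (input_tokens / 1_000_000) * input_price_per_million + (
        output_tokens / 1_000_000
    ) * output_price_per_million


# Hypothetical workload: 10,000 requests x (2,000 input + 500 output tokens)
total = estimate_cost(10_000 * 2_000, 10_000 * 500, 0.80, 2.40)
print(f"${total:.2f}")  # prints $28.00 (20M input tokens = $16.00, 5M output tokens = $12.00)
```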

Can I fine-tune models in AWS Bedrock?

Yes, AWS Bedrock supports fine-tuning for select models such as Amazon Titan, Cohere Command, and Meta Llama. You upload a training dataset to Amazon S3 and customize the model for specific tasks such as classification or domain-specific text generation.

Is my data safe in AWS Bedrock?

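Absolutely. By default, AWS does not store your prompts and responses, and it does not use your data to train foundation models. Data is encrypted in transit and at rest, and you can reach Bedrock through VPC interface endpoints (AWS PrivateLink) so traffic stays off the public internet. AWS Bedrock is HIPAA eligible and can be used in GDPR-compliant workloads.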

Which models are available on AWS Bedrock?

AWS Bedrock offers models from leading AI companies, including Anthropic’s Claude, AI21’s Jurassic-2, Cohere’s Command, Meta’s Llama 2 and Llama 3, and Amazon’s Titan series. New models are added regularly.

Amazon Web Services’ AWS Bedrock is redefining how businesses leverage generative AI. With its serverless architecture, multi-model support, enterprise-grade security, and seamless AWS integration, it offers a powerful, flexible, and cost-effective platform for AI innovation. Whether you’re building chatbots, automating content, or analyzing data, AWS Bedrock provides the tools to bring your ideas to life—fast. As the platform evolves, it will continue to lower barriers, drive adoption, and shape the future of AI across industries.
